DLT: Conditioned layout generation with Joint Discrete-Continuous Diffusion Layout Transformer

ICCV 2023

Abstract

Generating visual layouts is an essential ingredient of graphic design. The ability to condition layout generation on a partial subset of component attributes is critical to real-world applications that involve user interaction. Recently, diffusion models have demonstrated high-quality generative performance in various domains. However, it is unclear how to apply diffusion models to the natural representation of layouts, which consists of a mix of discrete (class) and continuous (location, size) attributes. To address the conditioned layout generation problem, we introduce DLT, a joint discrete-continuous diffusion model. DLT is a transformer-based model with a flexible conditioning mechanism that allows for conditioning on any given subset of the layout component classes, locations, and sizes. Our method outperforms state-of-the-art generative models on various layout generation datasets with respect to different metrics and conditioning settings. Additionally, we validate the effectiveness of our proposed conditioning mechanism and the joint discrete-continuous diffusion process. This joint process can be incorporated into a wide range of mixed discrete-continuous generative tasks.

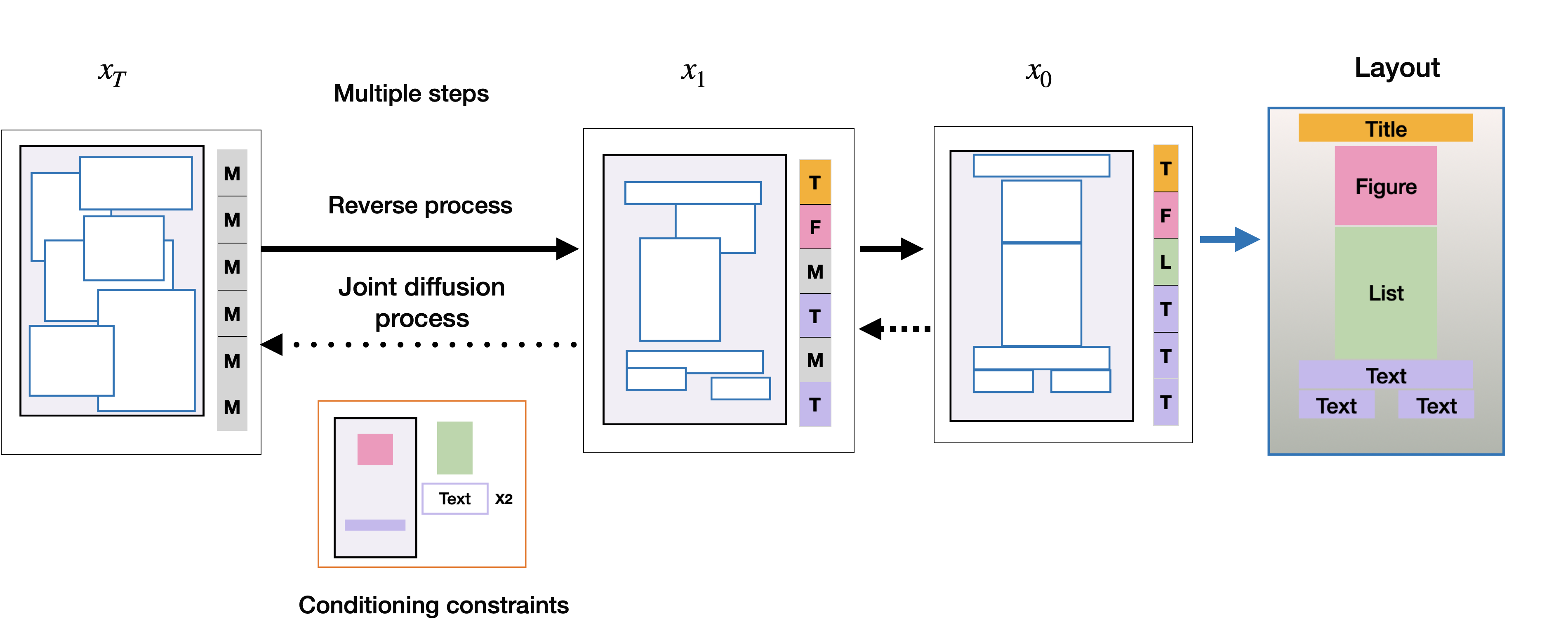

Joint Discrete-Continuous Diffusion Process

We propose a framework for generating layout, document, and graphic designs using a transformer encoder and a diffusion model architecture. It supports both discrete and continuous attribute generation.

The diffusion process is applied jointly to both kinds of component attributes. The continuous attributes (size and location) are noised by adding small Gaussian noise, and the discrete attributes (class) are noised by shifting probability mass toward a dedicated Mask class. The reverse diffusion model can be conditioned on any given subset of the attributes.
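A minimal sketch of one joint forward-noising step may help make this concrete. It is an illustration under stated assumptions, not the authors' implementation: the function `noise_layout`, the `MASK` id, and the parameters `alpha_bar` and `mask_prob` are hypothetical names, the continuous branch uses the common variance-preserving Gaussian form, and the discrete branch replaces a class with Mask with a fixed probability. The actual noise schedules are not specified on this page.

```python
import math
import random

MASK = -1  # hypothetical id for the extra Mask class (assumption)


def noise_layout(boxes, classes, alpha_bar, mask_prob, rng):
    """Jointly noise one layout (illustrative sketch only).

    boxes:     list of [x, y, w, h] floats (continuous attributes)
    classes:   list of int class ids (discrete attributes)
    alpha_bar: assumed signal-retention factor in [0, 1] for this step
    mask_prob: assumed probability that a class is replaced by MASK
    rng:       a random.Random instance
    """
    signal = math.sqrt(alpha_bar)
    sigma = math.sqrt(1.0 - alpha_bar)
    # Continuous branch: scale the signal and add Gaussian noise.
    noisy_boxes = [
        [signal * v + sigma * rng.gauss(0.0, 1.0) for v in box]
        for box in boxes
    ]
    # Discrete branch: move each class to the Mask state with prob. mask_prob.
    noisy_classes = [MASK if rng.random() < mask_prob else c for c in classes]
    return noisy_boxes, noisy_classes


if __name__ == "__main__":
    rng = random.Random(0)
    boxes = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.5, 0.2, 0.2]]
    classes = [2, 3]
    print(noise_layout(boxes, classes, alpha_bar=0.9, mask_prob=0.3, rng=rng))
```

In this sketch both branches are driven by the same step, so a later step would use a smaller `alpha_bar` and a larger `mask_prob` together.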

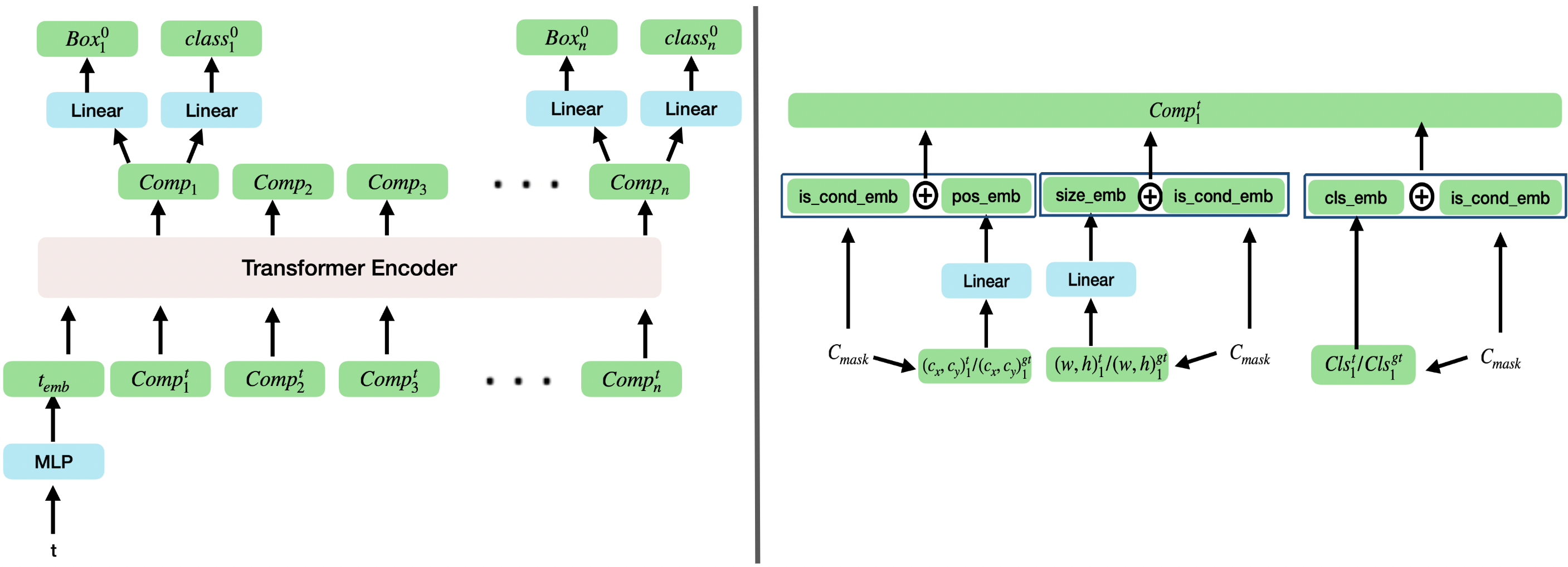

Architecture Details

The transformer receives an embedding that represents step t of the diffusion process and an embedding for each of the layout components. Its output is the denoised coordinates and classes of the components. During inference, this output is noised back to step t-1 before the next iteration.
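The inference procedure described above can be sketched as a simple loop: predict the clean layout, then noise it back to step t-1. This is a hedged illustration only. The names `sample`, `toy_denoise`, and `toy_renoise` are hypothetical, the "denoiser" is a stand-in that always returns a fixed box rather than a trained transformer, and the noise scale is an arbitrary choice.

```python
import random

TARGET = [0.25, 0.5, 0.3, 0.2]  # toy "clean" box [x, y, w, h] (assumption)
_rng = random.Random(0)


def sample(denoise, renoise, layout, num_steps):
    """Reverse process sketch: predict clean output, noise back to t-1."""
    for t in range(num_steps, 0, -1):
        clean = denoise(layout, t)  # model predicts the clean layout
        # At the last step there is nothing left to noise back to.
        layout = renoise(clean, t - 1) if t > 1 else clean
    return layout


def toy_denoise(layout, t):
    # Stand-in for the transformer: ignores its input, returns a fixed box.
    return list(TARGET)


def toy_renoise(clean, t, num_steps=10):
    # Stand-in for the forward process: noise scale grows with the step t.
    scale = 0.1 * t / num_steps
    return [v + scale * _rng.gauss(0.0, 1.0) for v in clean]


result = sample(toy_denoise, toy_renoise, [0.0, 0.0, 0.0, 0.0], num_steps=10)
print(result)
```

Predicting the clean layout at every step, rather than the noise, is what lets the same loop handle coordinates and classes with one model output.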

The component embedding is a concatenation of a position embedding, a size embedding, and a class embedding. Each of the three sub-embeddings can come either from the diffusion process or from the conditioning information. The model is told explicitly which parts of the input are given as conditions: when an attribute is part of the conditioning, a trainable vector is added to its sub-embedding. Each attribute has its own separate condition embedding.
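The embedding scheme above could look roughly like the following sketch. It is an assumption-laden illustration: `DIM`, `COND_VECTORS`, and `component_embedding` are hypothetical names, plain Python lists stand in for tensors, and the random vectors stand in for parameters that would be learned during training.

```python
import random

DIM = 4  # hypothetical width of each sub-embedding (assumption)
ATTRIBUTES = ("position", "size", "class")

_rng = random.Random(0)
# One condition vector per attribute; random values stand in for
# parameters that would be trained in a real model.
COND_VECTORS = {
    name: [_rng.uniform(-1.0, 1.0) for _ in range(DIM)] for name in ATTRIBUTES
}


def component_embedding(sub_embeddings, conditioned):
    """Concatenate the three sub-embeddings of one layout component.

    sub_embeddings: dict attribute name -> list of DIM floats
    conditioned:    dict attribute name -> bool (True if given as condition)
    """
    parts = []
    for name in ATTRIBUTES:
        vec = sub_embeddings[name]
        if conditioned[name]:
            # Mark the attribute as a condition by adding its own vector.
            vec = [v + c for v, c in zip(vec, COND_VECTORS[name])]
        parts.extend(vec)
    return parts


subs = {"position": [0.0] * DIM, "size": [1.0] * DIM, "class": [2.0] * DIM}
flags = {"position": False, "size": True, "class": False}
print(component_embedding(subs, flags))
```

Keeping a separate vector per attribute lets the model distinguish, for example, a layout where only sizes are fixed from one where only classes are fixed.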

Results

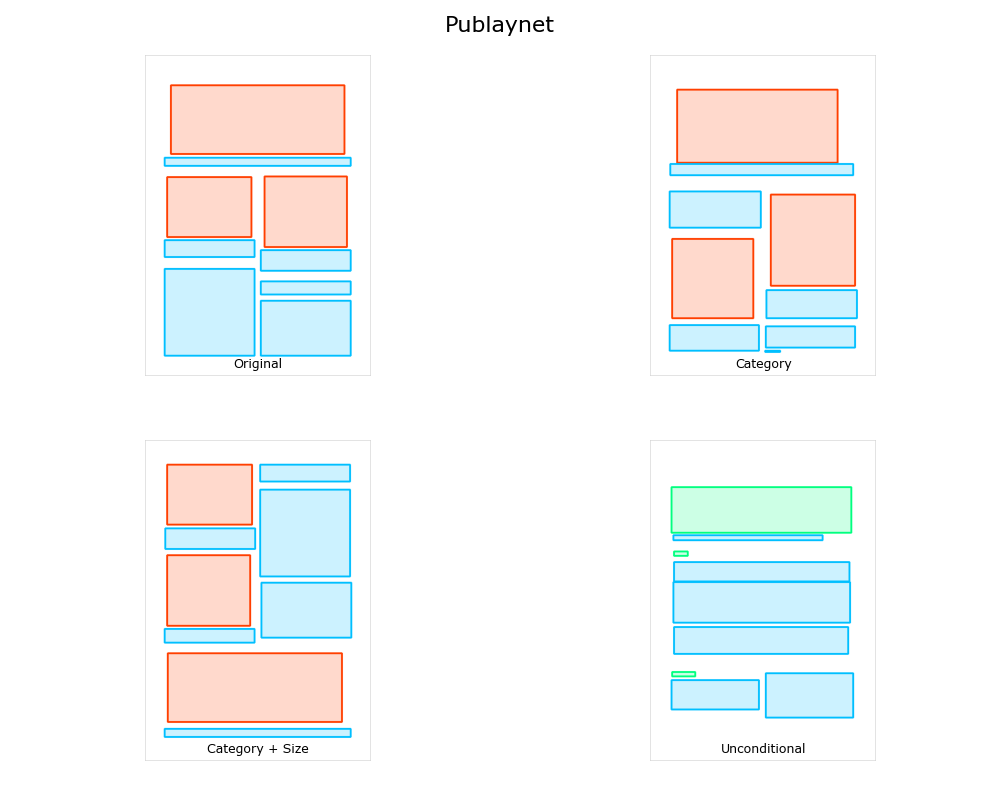

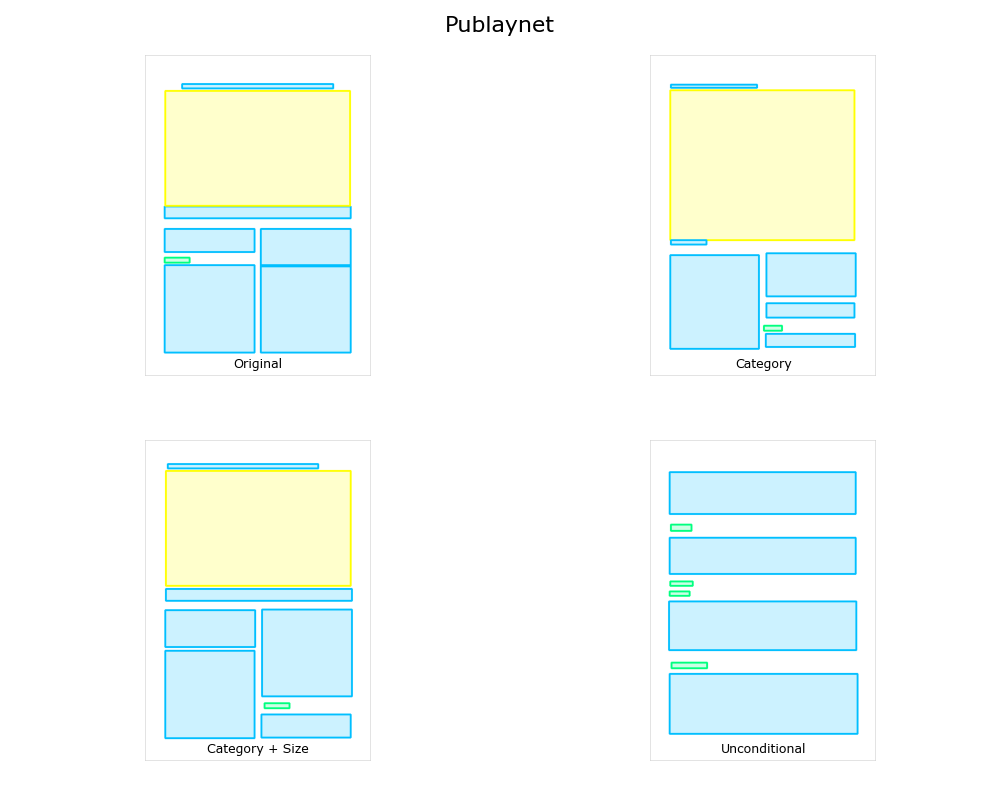

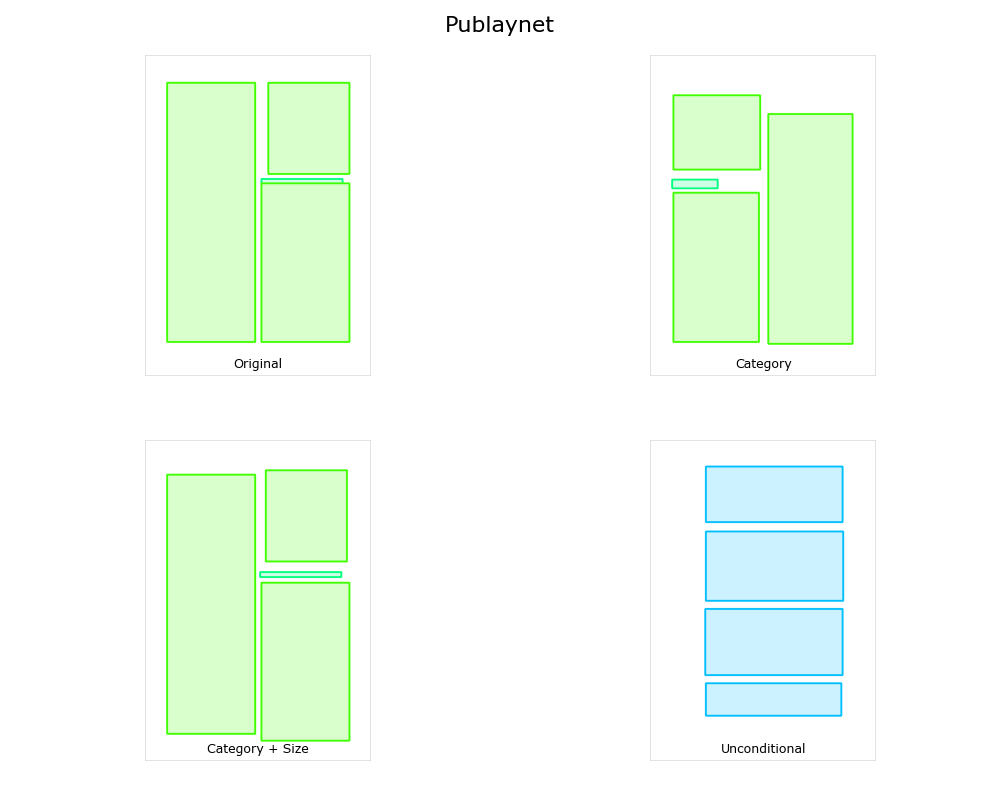

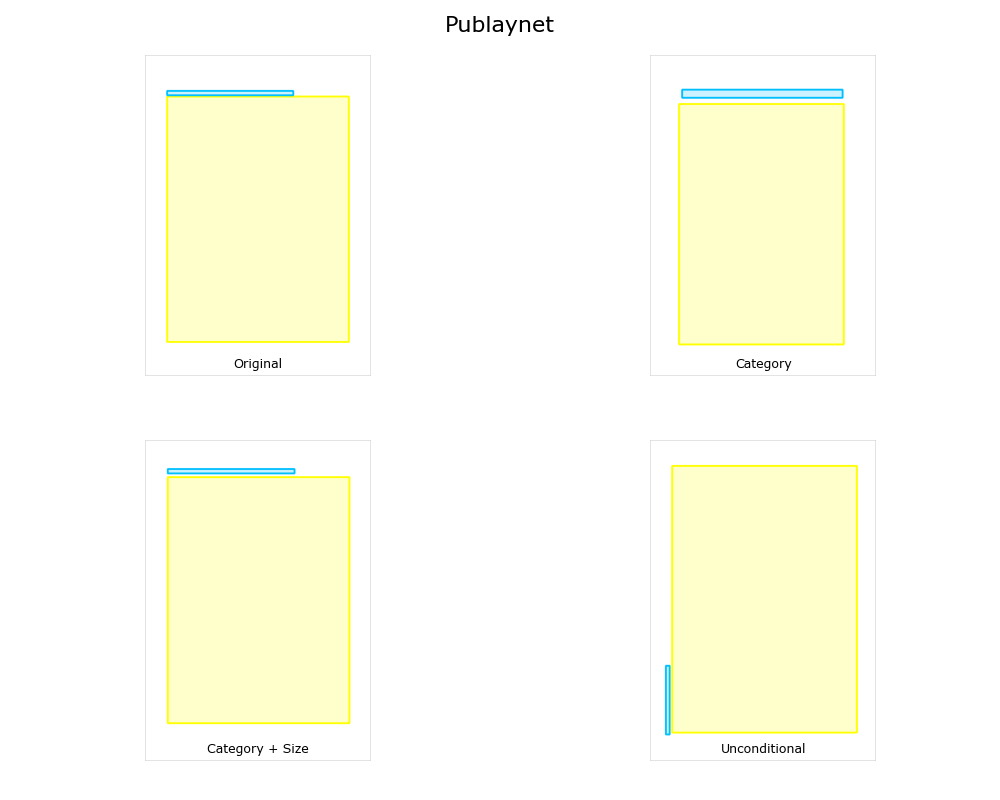

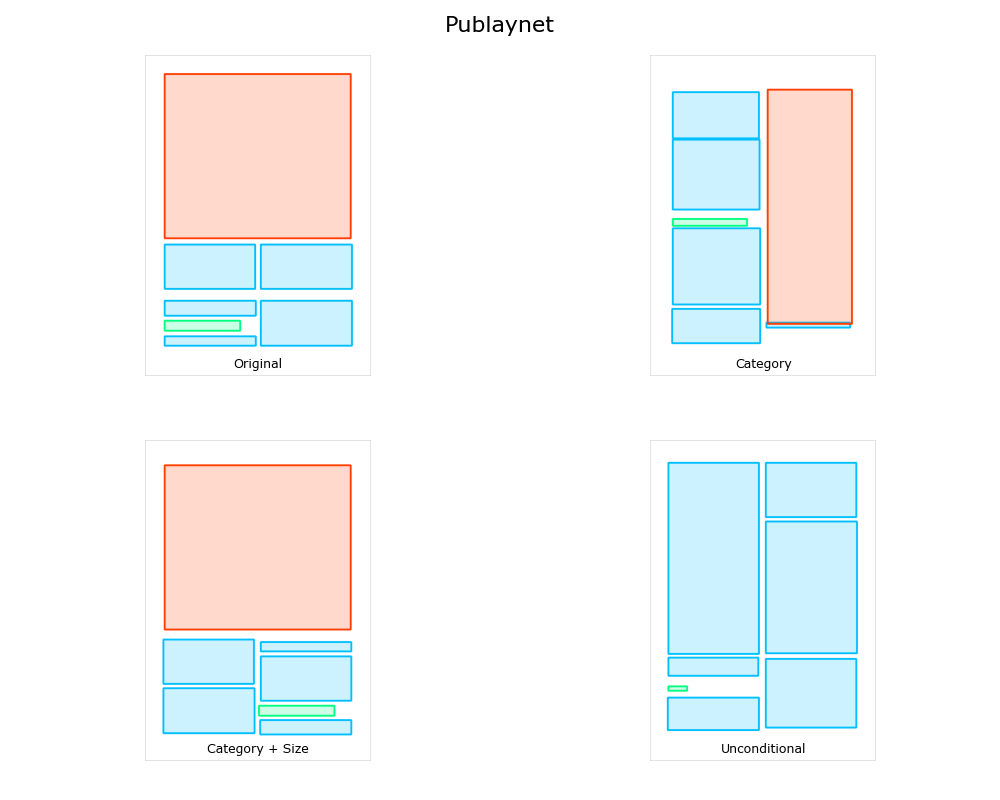

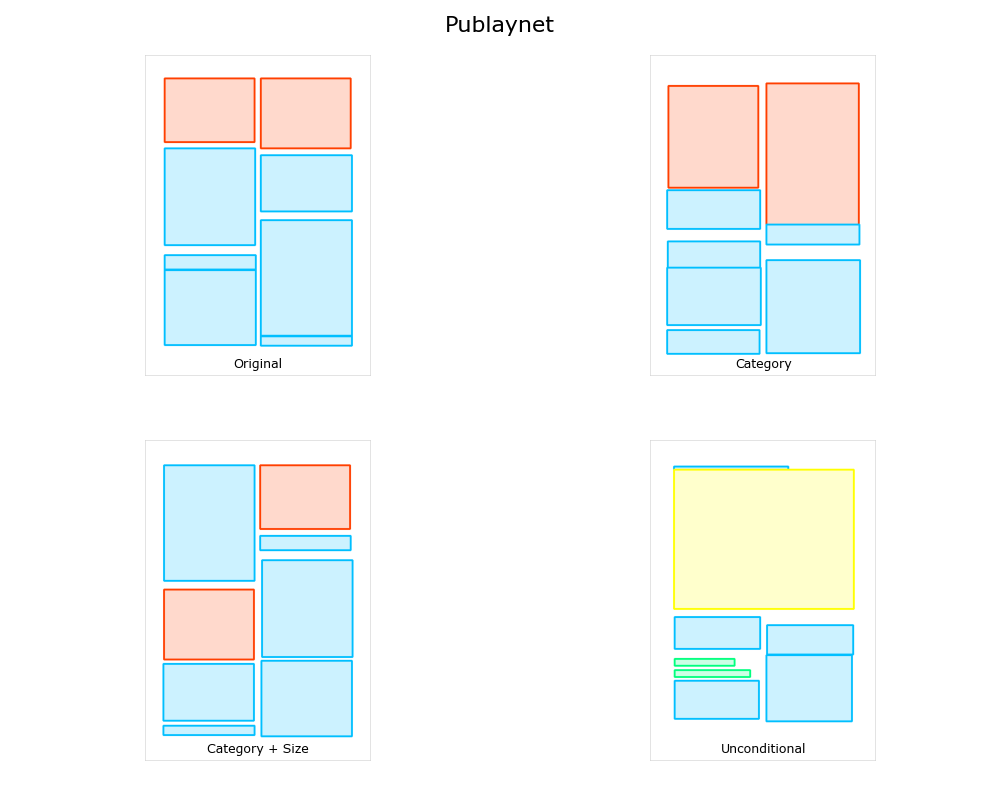

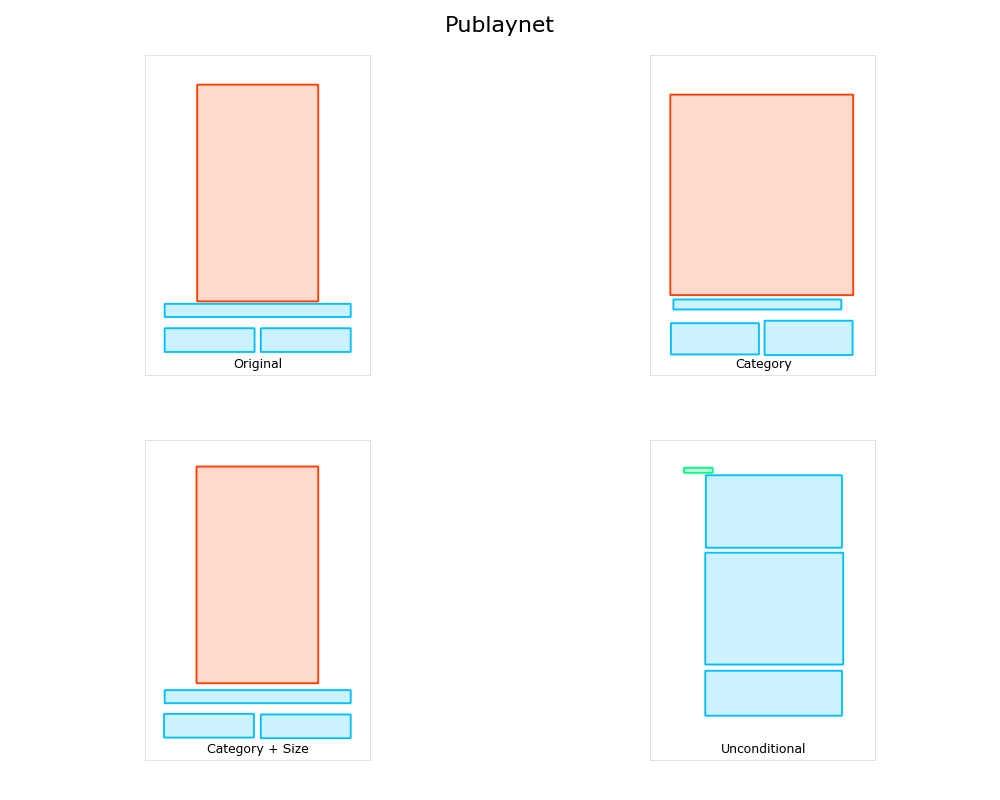

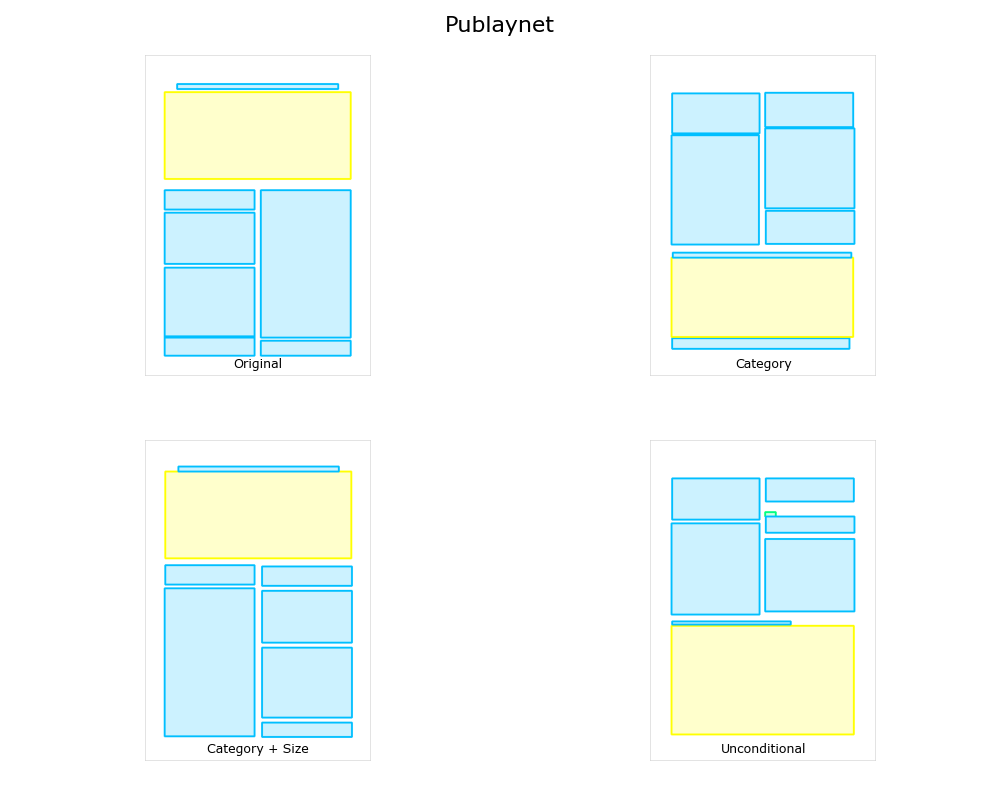

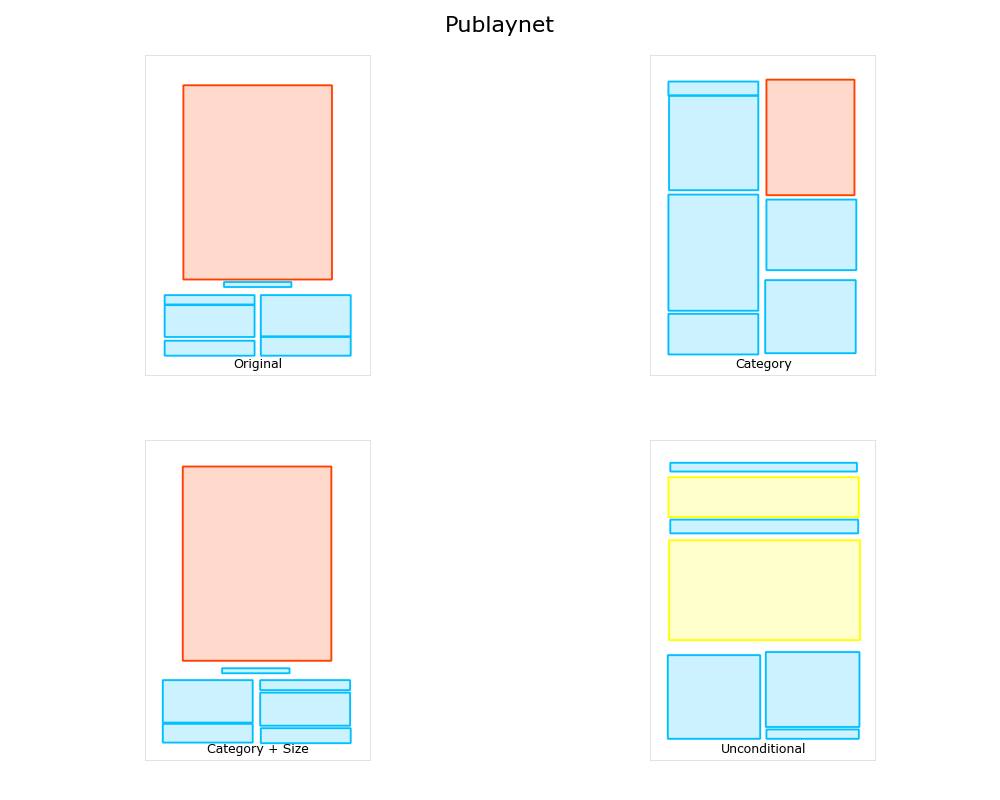

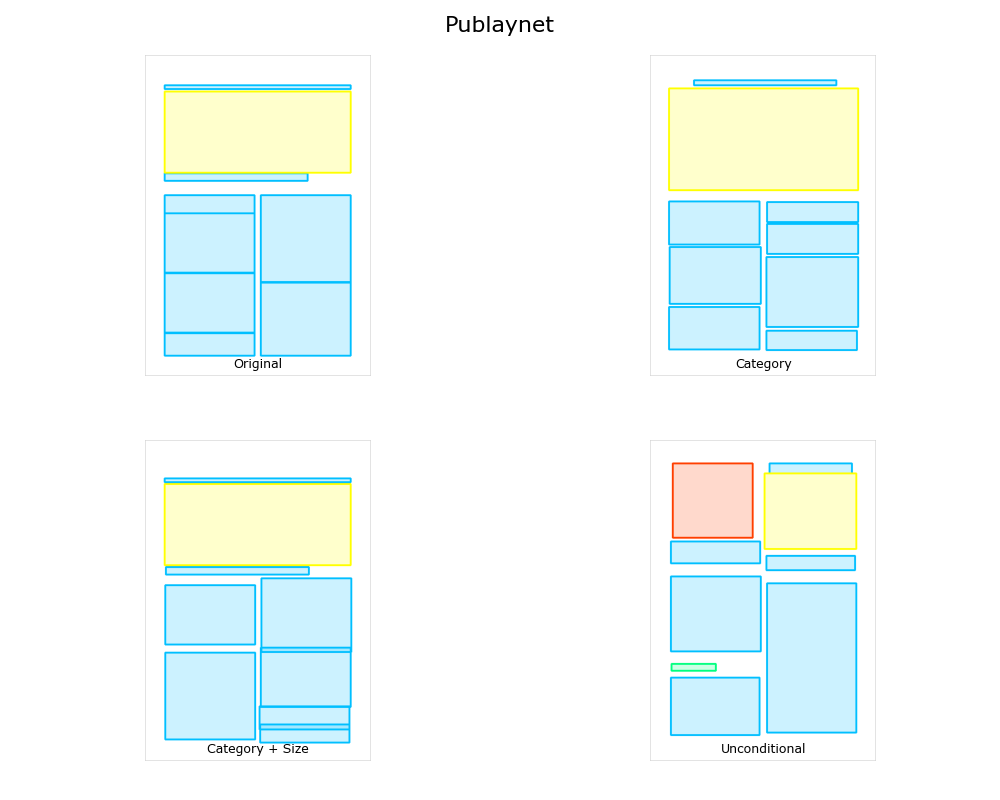

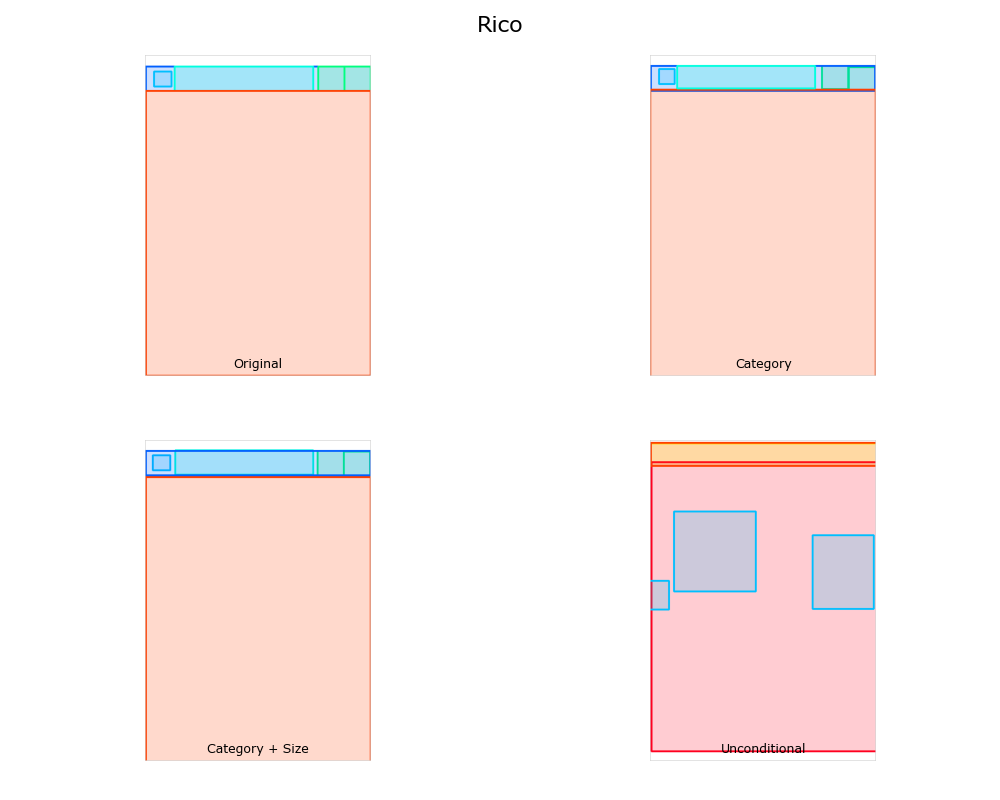

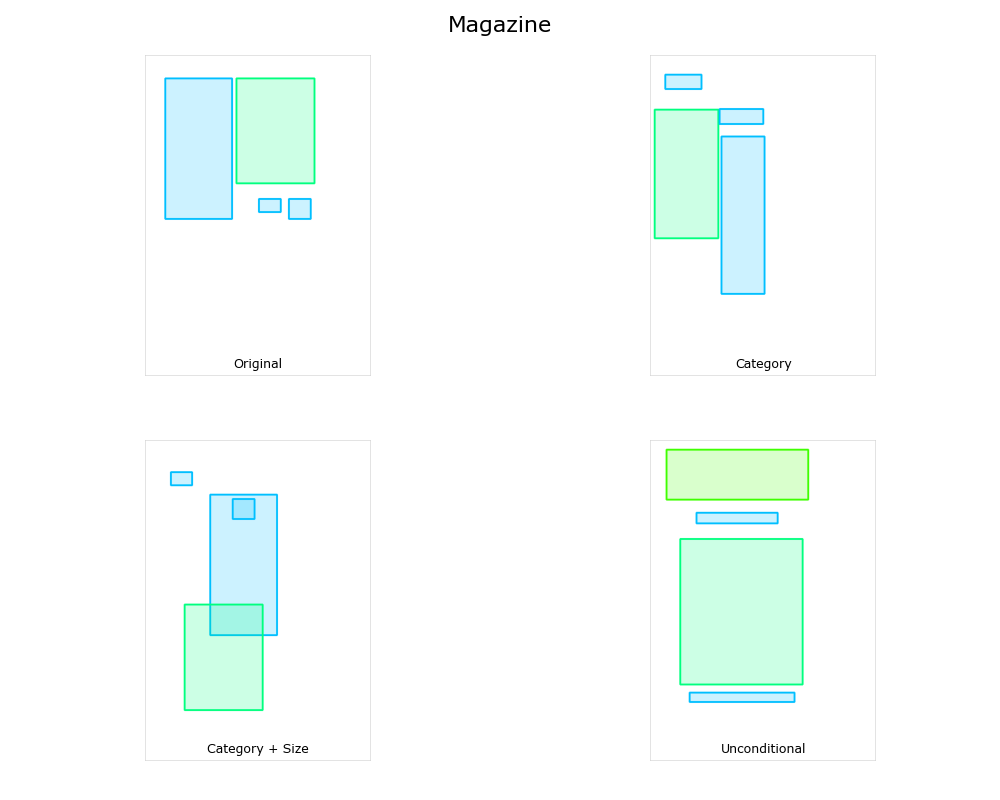

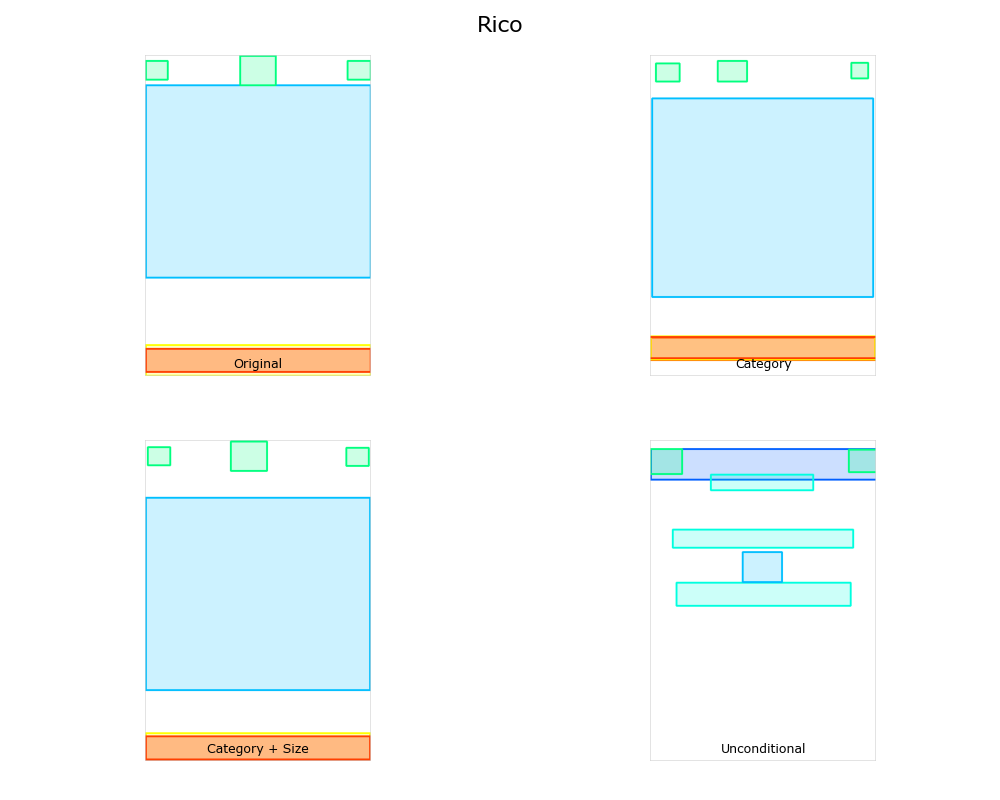

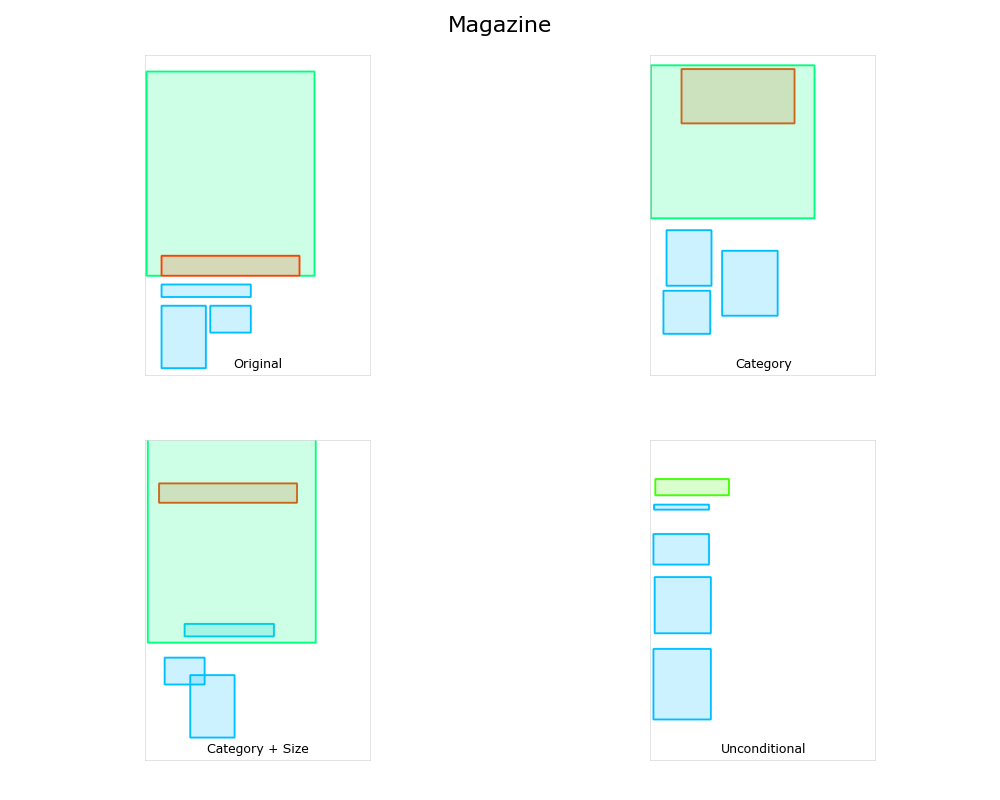

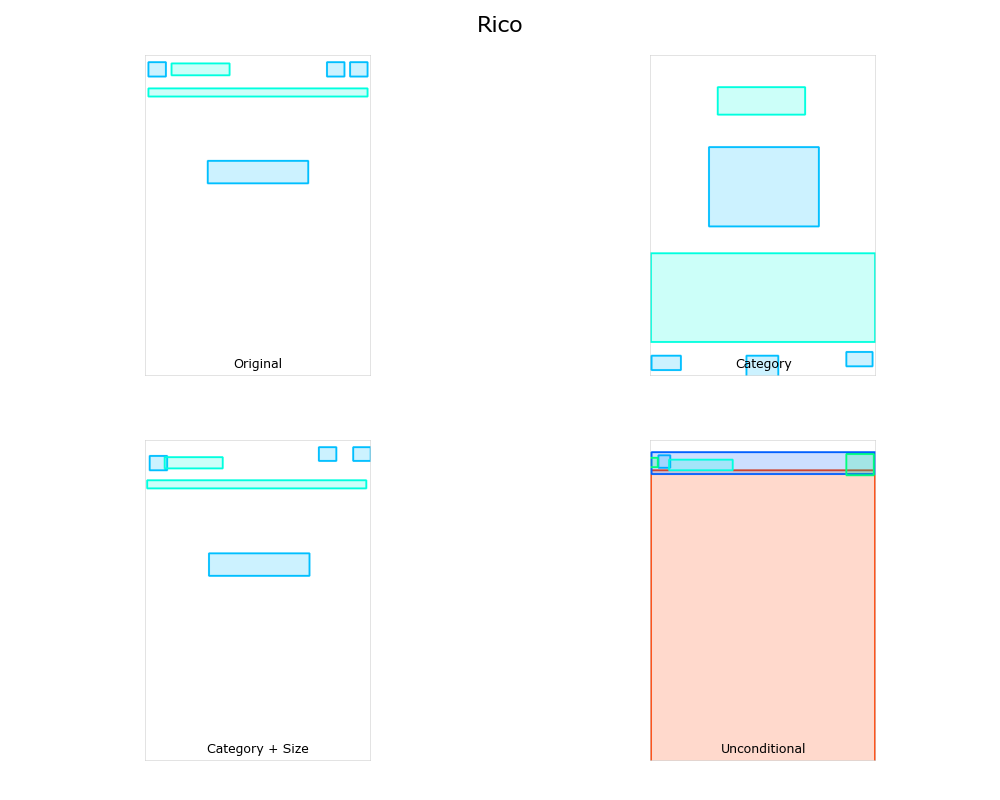

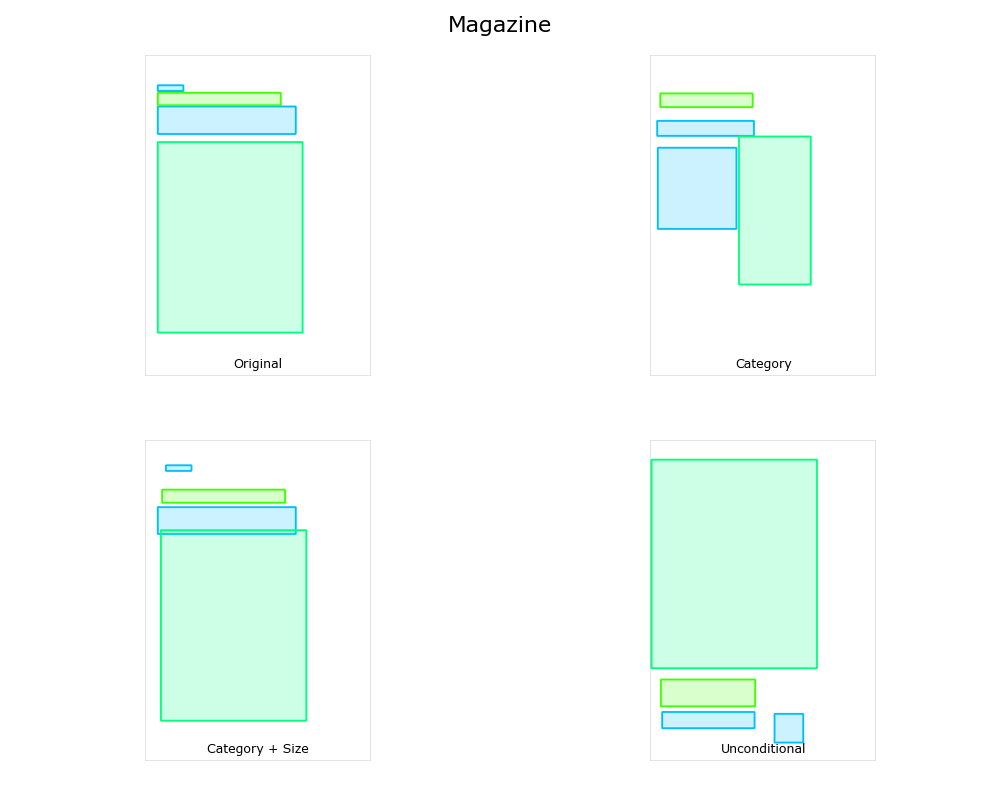

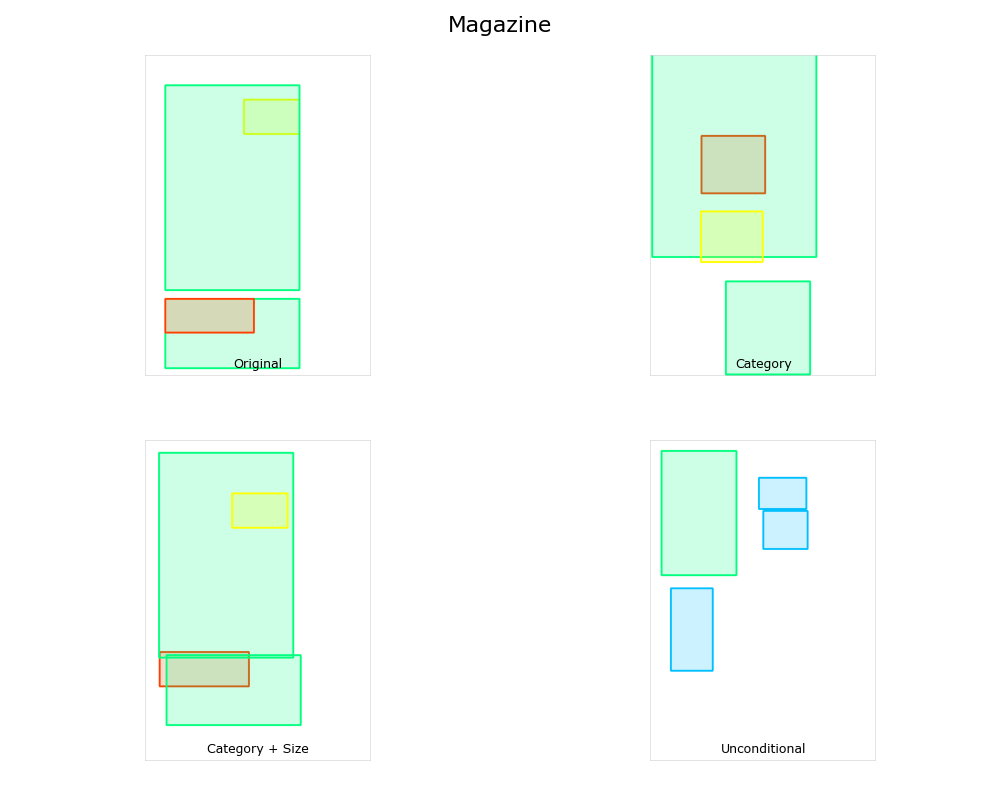

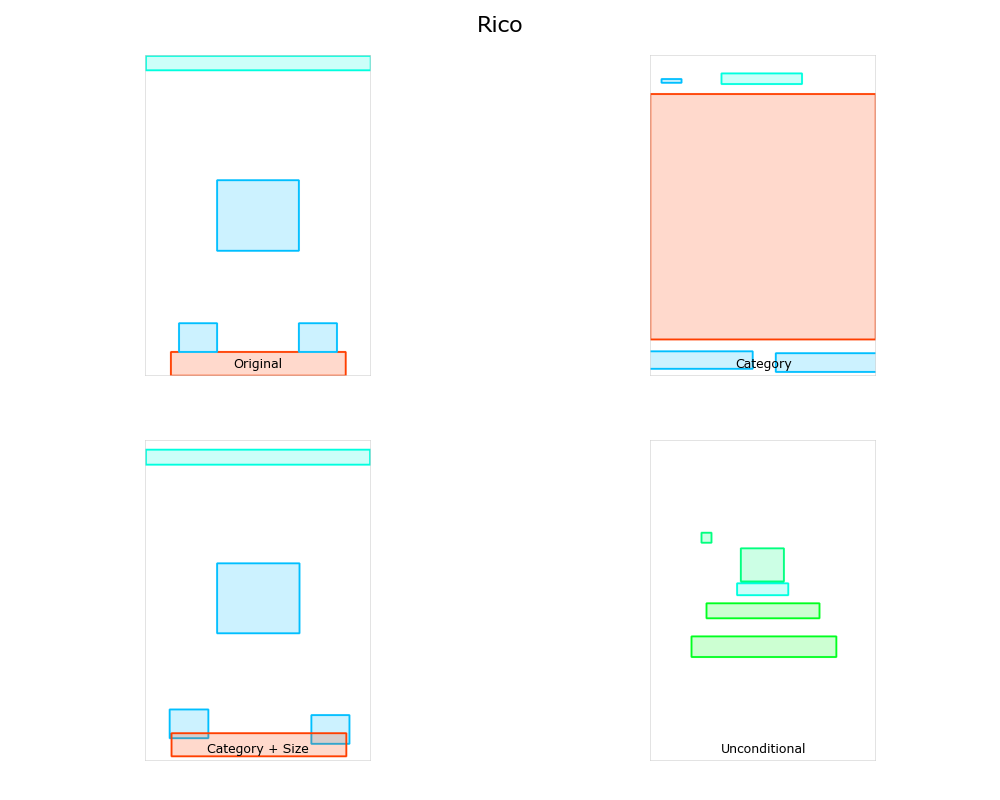

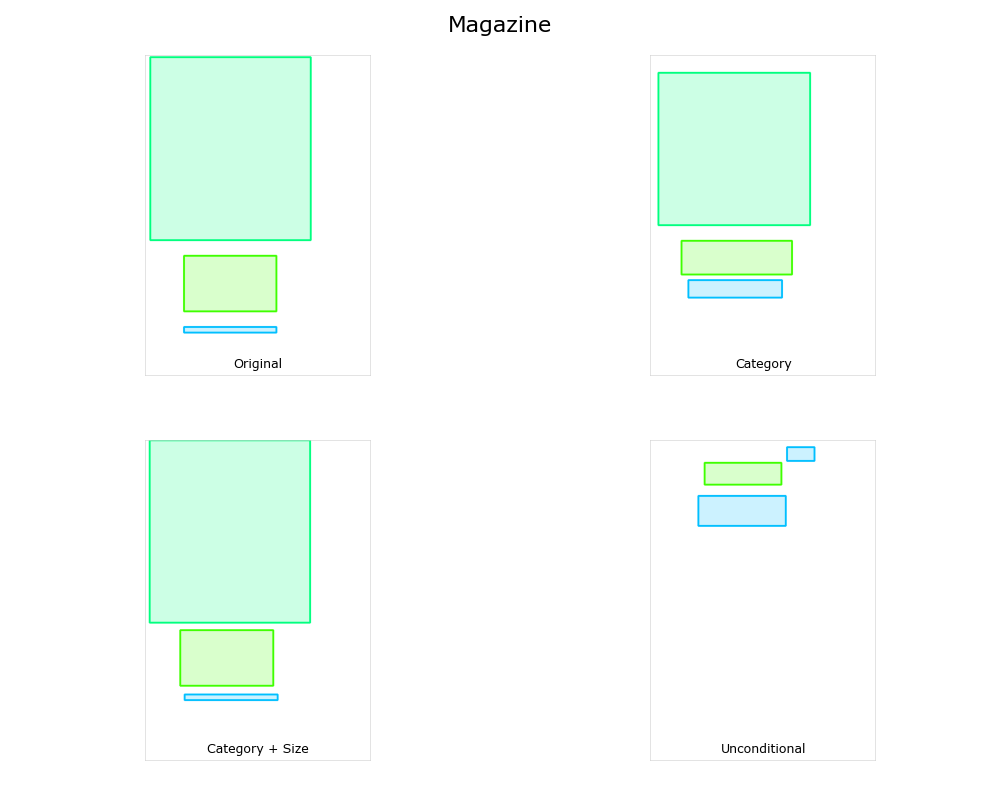

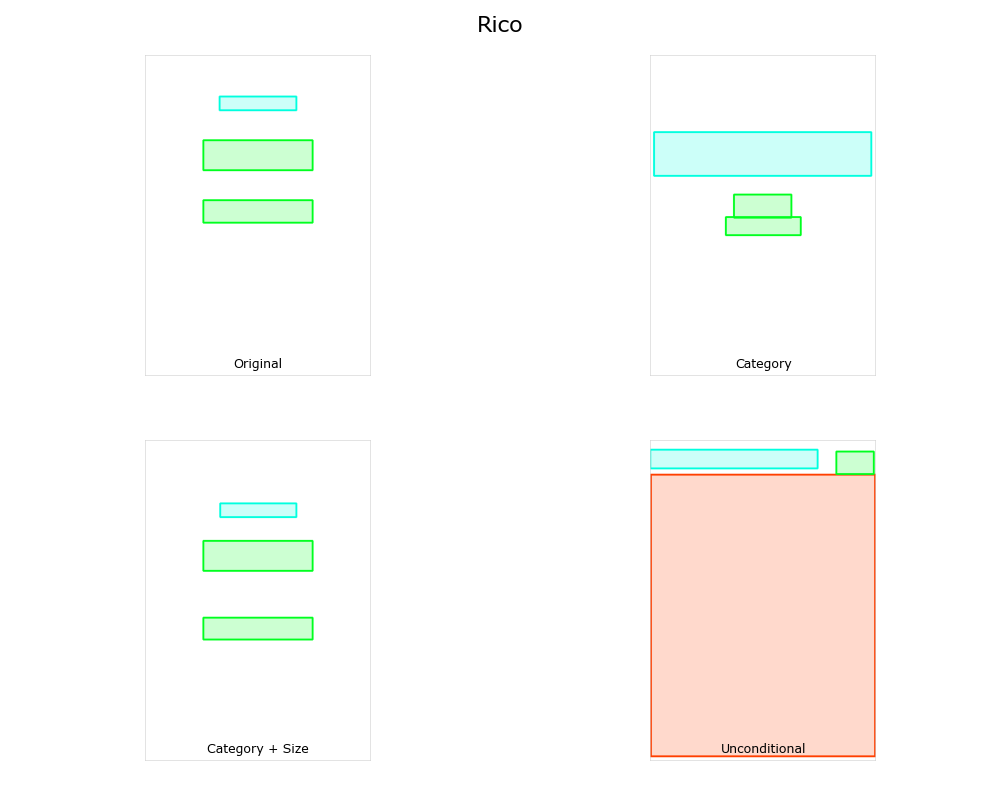

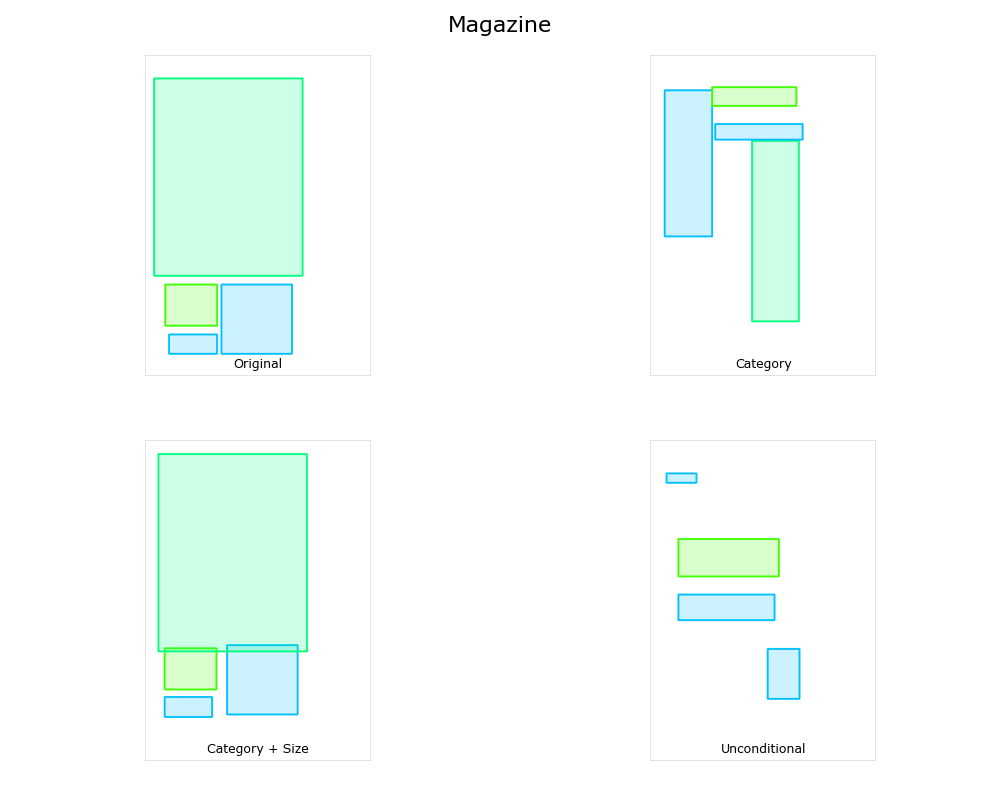

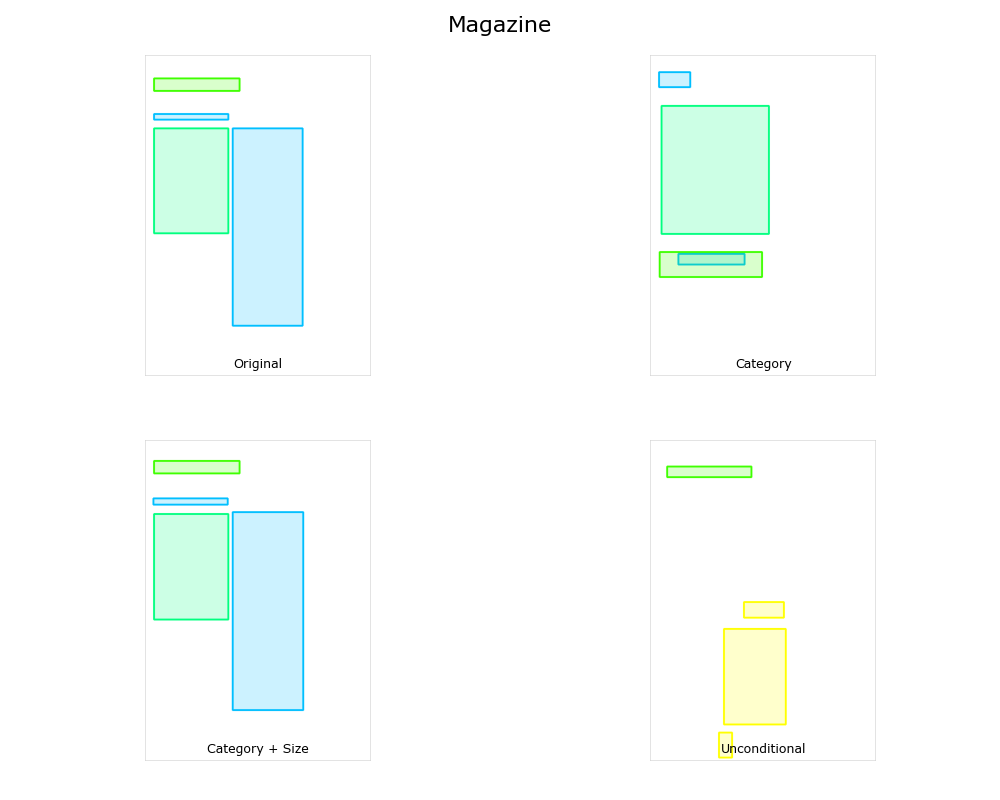

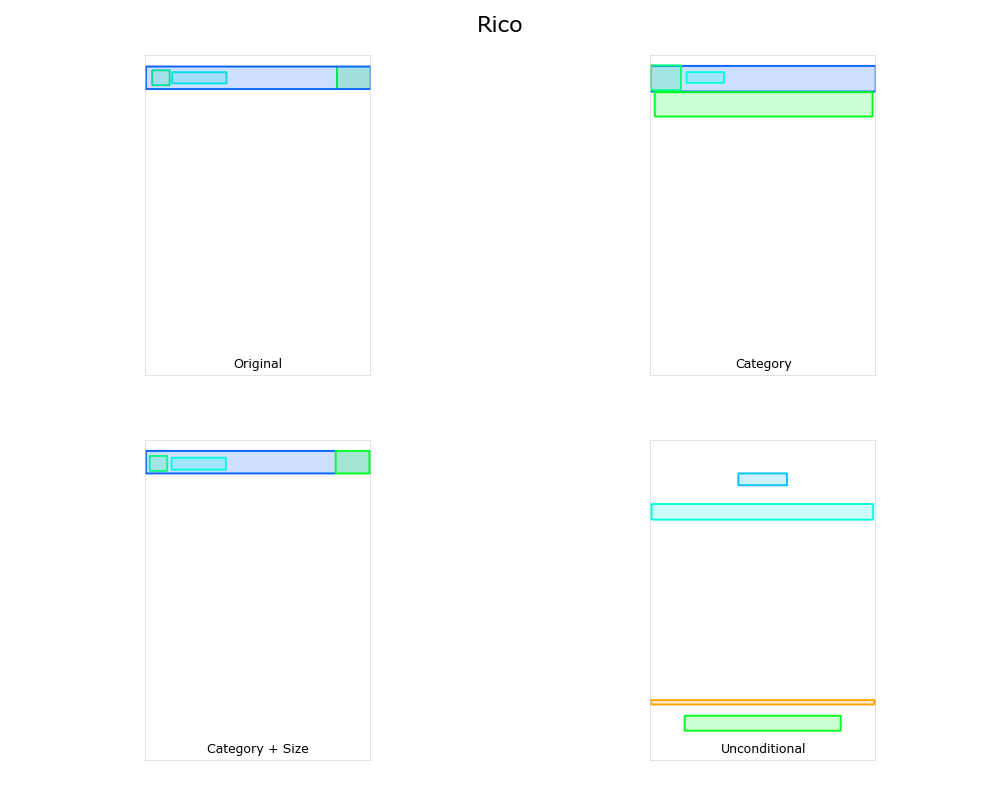

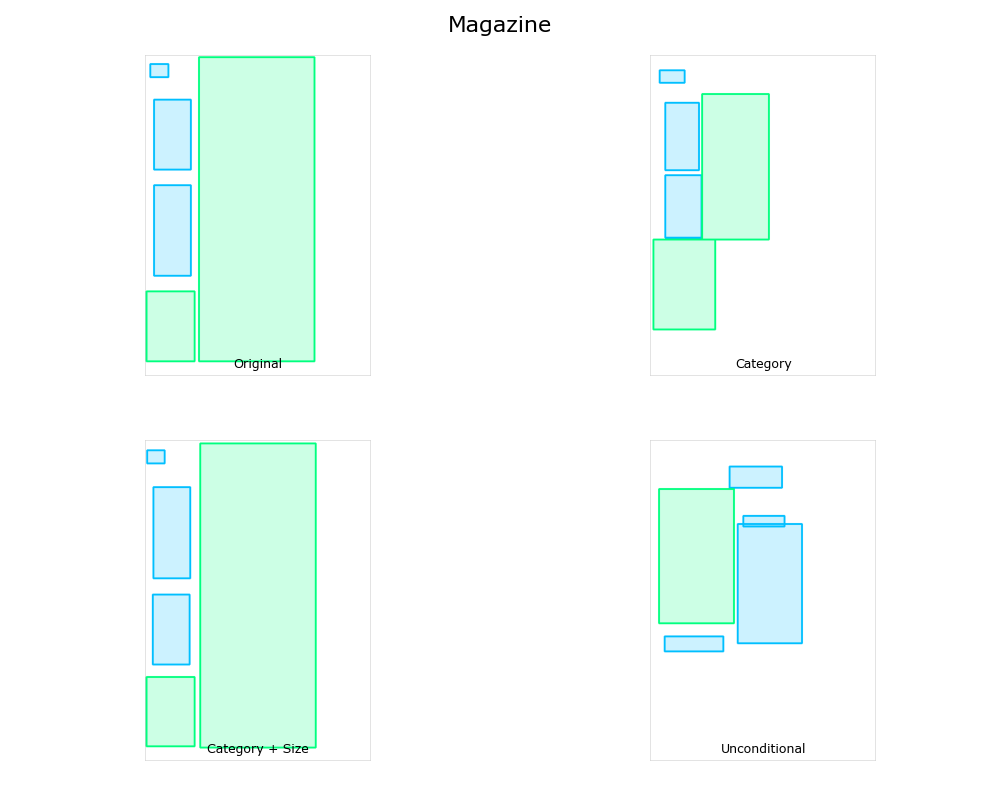

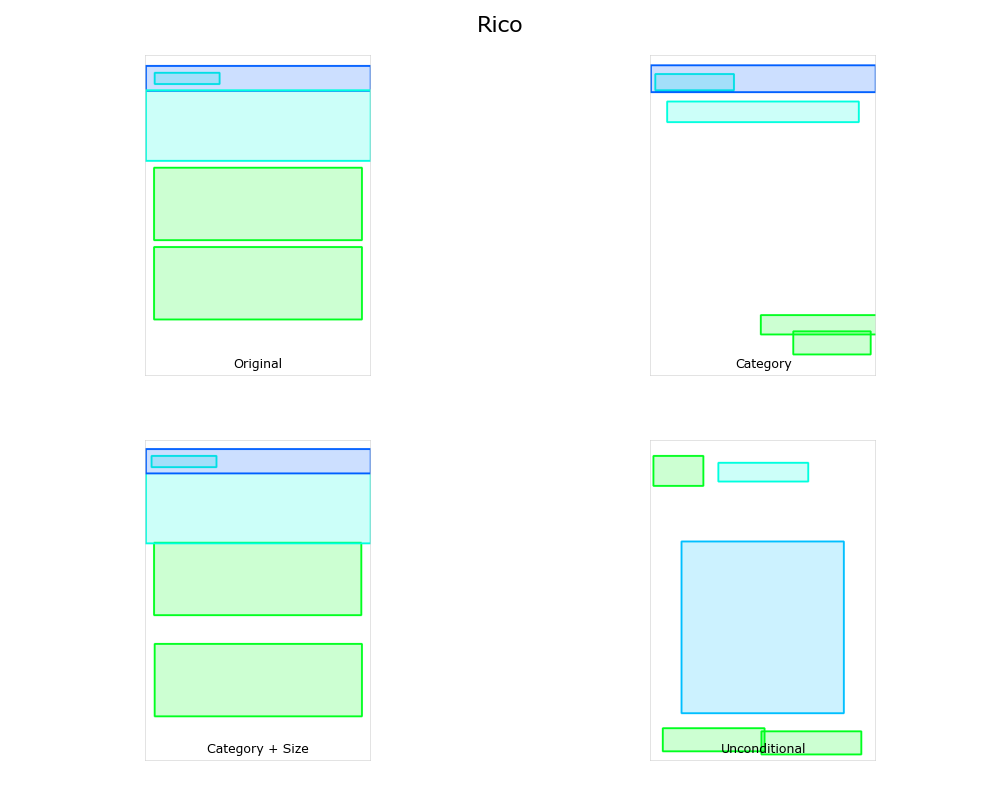

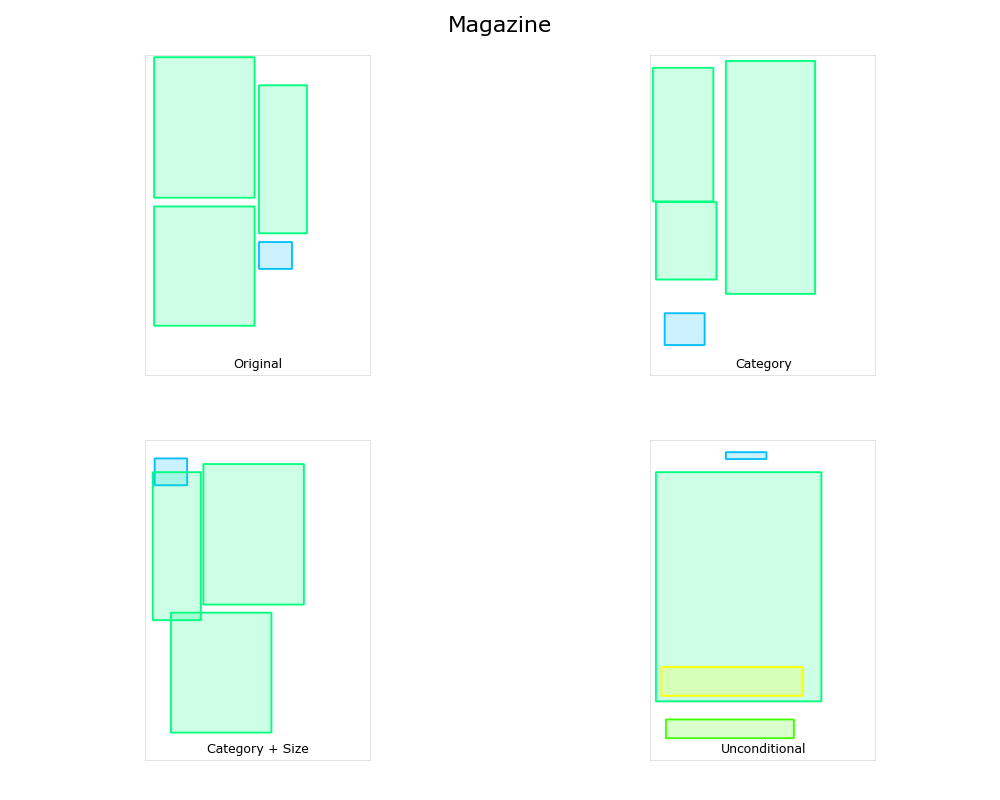

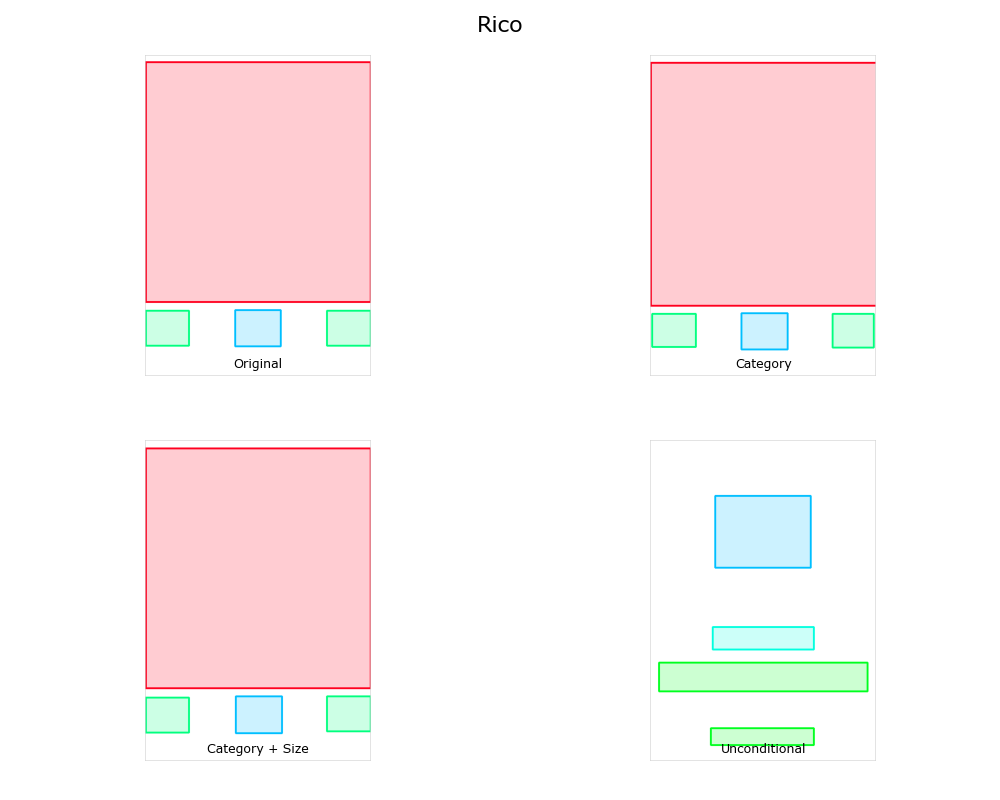

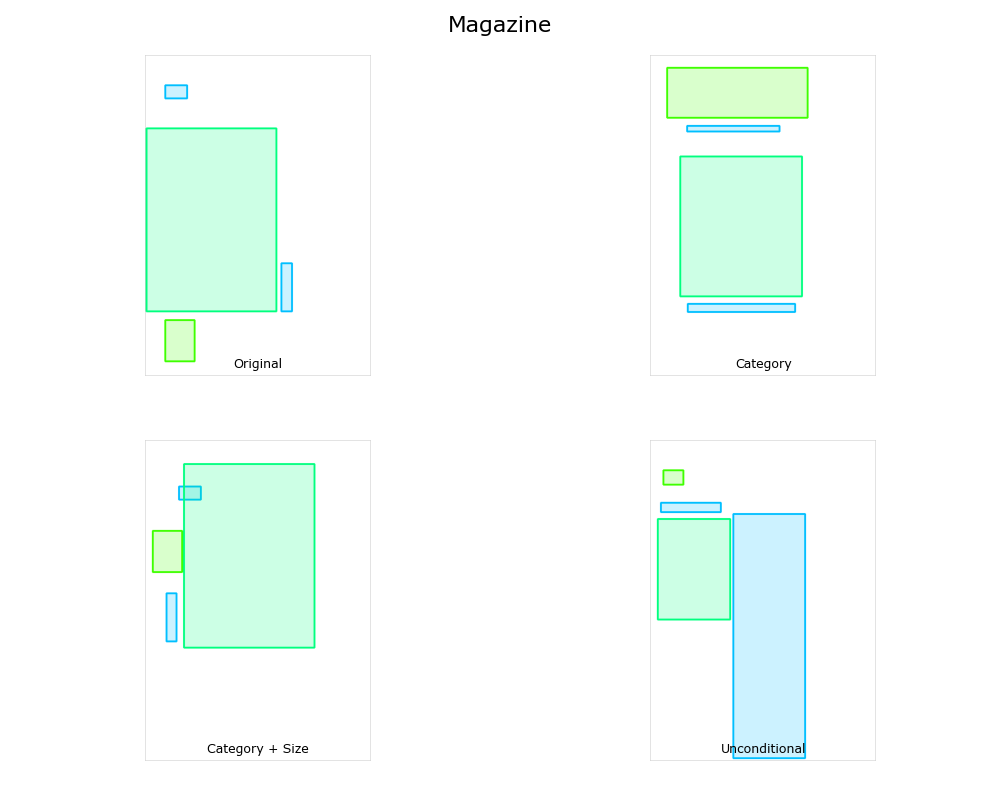

Qualitative comparison of generated layouts between all tested methods on the PubLayNet, Rico and Magazine datasets. The top left image is the ground truth layout, which we used as the condition for the Category (top right) and Category + Size (bottom left) settings. The bottom right image is the layout generated without any conditioning.

Citation

@misc{levi2023dlt,
  title={DLT: Conditioned layout generation with Joint Discrete-Continuous Diffusion Layout Transformer},
  author={Elad Levi and Eli Brosh and Mykola Mykhailych and Meir Perez},
  year={2023},
  eprint={2303.03755},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

Acknowledgements

The website template was borrowed from Mip-NeRF 360 and LayoutDM.